About me

Hello there! Thank you for visiting my website. I’m thrilled to share that I recently completed my Master’s degree in Robotics at the University of Pennsylvania. I am currently seeking job opportunities in computer vision and motion planning. Please feel free to contact me about relevant roles via email.

In my professional career, I worked as an integration lead at SkyMul (based in Atlanta), where I developed end-to-end software pipelines that integrate seamlessly with their quadrupedal hardware platform. I have worked extensively with ROS. Before joining Penn, I also worked on feature development for mobile robots at Mowito, a robotics startup based in India.

My passion lies in the holistic development of autonomous mobile robots. I aim to build robust software pipelines that can seamlessly integrate with specific hardware requirements. If you would like to learn more about my qualifications and experience, please find my resume here.

Research Experience

mLAB- Autonomous Go-Kart Group | GRASP-UPenn

Latest Update:- UPenn are the WINNERS of the Autonomous go-kart challenge hosted at Purdue University!!!

Over two semesters of active participation in this competition, I gained hands-on experience implementing both reactive and pre-mapping based controls. The work let me put the theoretical concepts I learned at UPenn into practice. My experience developing software pipelines with the Robot Operating System (ROS) also proved useful here.

The competition itself was divided into two distinct parts. In the first part, our objective was to navigate the track without relying on a pre-mapped path, using a reactive approach to control the autonomous go-kart. Our goal was to react to the observed track in real time and operate safely for five laps to secure victory. To achieve this, we used image-based grass detection from a single monocular camera. By transforming the camera’s image into a pre-calibrated bird’s eye view and adjusting depth measurements on a per-pixel basis, we obtained reliable lane detections. These detections were then converted into a laser scan format, which served as input for our gap-follow algorithm. Our approach performed well, especially under favorable lighting conditions. You can watch the back-end visualization of our algorithm and the actual run below.
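As a rough illustration of the gap-follow idea (not our competition code), the sketch below picks the widest run of scan ranges that exceed a clearance distance and steers toward its center. The function name, the `clearance` parameter, and its default value are assumptions made for this example.

```python
def gap_follow_steering(ranges, angle_min, angle_increment, clearance=2.0):
    """Return the heading (rad) toward the middle of the widest free gap.

    ranges: distances from a laser-scan-like array, ordered by angle.
    angle_min, angle_increment: angle of the first beam and the step between beams.
    clearance: hypothetical threshold; beams farther than this count as free.
    Returns None when no beam exceeds the clearance.
    """
    best_start, best_len = 0, 0
    start = None
    # A trailing 0.0 acts as a sentinel so a gap touching the last beam is closed.
    for i, r in enumerate(list(ranges) + [0.0]):
        if r > clearance:
            if start is None:
                start = i
        else:
            if start is not None and i - start > best_len:
                best_start, best_len = start, i - start
            start = None
    if best_len == 0:
        return None
    mid_index = best_start + (best_len - 1) / 2.0
    return angle_min + mid_index * angle_increment
```

A real implementation would typically also inflate obstacles by the vehicle width and smooth the steering command before sending it to the kart.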

In the second part of the competition, our team was tasked with implementing pre-mapping based controls. To win this category, a team had to complete five laps in the shortest possible time. My specific responsibility was to develop a lightweight Python library that fuses data from multiple sensors, such as GPS, IMU, and wheel odometry, using a partial state update. You may be wondering why we created a separate library instead of using existing open-source options. It’s a valid question, and we initially started the project with the well-known Robot Localization library in ROS. However, upon careful consideration, we determined that this library was excessive for our specific requirements. Taking inspiration from its approach, I devised a partial update scheme for the Extended Kalman Filter (EKF) algorithm, which proved to be highly effective for our needs.

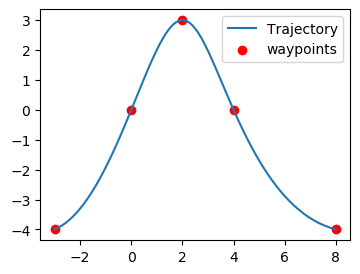
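To give a feel for what a partial state update means, here is a minimal sketch under simplifying assumptions, not the library itself. Each sensor reports only the state components it observes directly, and each reading is applied as a scalar Kalman update. The function name, the tuple layout, and the assumption of independent measurement noise are all choices made for this example.

```python
def partial_update(x, P, measurements):
    """Kalman measurement update using only the observed state components.

    x: state estimate as a list of floats.
    P: covariance as a list of lists (n x n).
    measurements: list of (state_index, value, variance) tuples. Each one
        observes a single state component directly; noise is assumed
        independent, so the readings can be applied one at a time.
    Returns the updated (x, P) without modifying the inputs.
    """
    x = list(x)
    P = [row[:] for row in P]
    n = len(x)
    for idx, z, r in measurements:
        s = P[idx][idx] + r                     # innovation variance
        k = [P[i][idx] / s for i in range(n)]   # Kalman gain column
        innovation = z - x[idx]
        x = [x[i] + k[i] * innovation for i in range(n)]
        row = P[idx][:]                         # H P is row idx of P
        P = [[P[i][j] - k[i] * row[j] for j in range(n)] for i in range(n)]
    return x, P
```

For example, a GPS fix could pass entries for the x and y position indices only, while an IMU reading could pass an entry for the yaw index, leaving every other state to be corrected solely through the covariance cross terms.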

To integrate this localization approach with the pure-pursuit algorithm, we established pre-mapping pipelines. Initially, we manually drove the go-kart, collecting waypoints along the track using our sensor fusion system. These collected waypoints served as the foundation for creating a path that the pure-pursuit algorithm would follow. We employed a simple linear interpolation scheme to generate a smooth and accurate trajectory for the pure-pursuit algorithm to track. I invite you to watch our winning pure-pursuit run in the competition below.

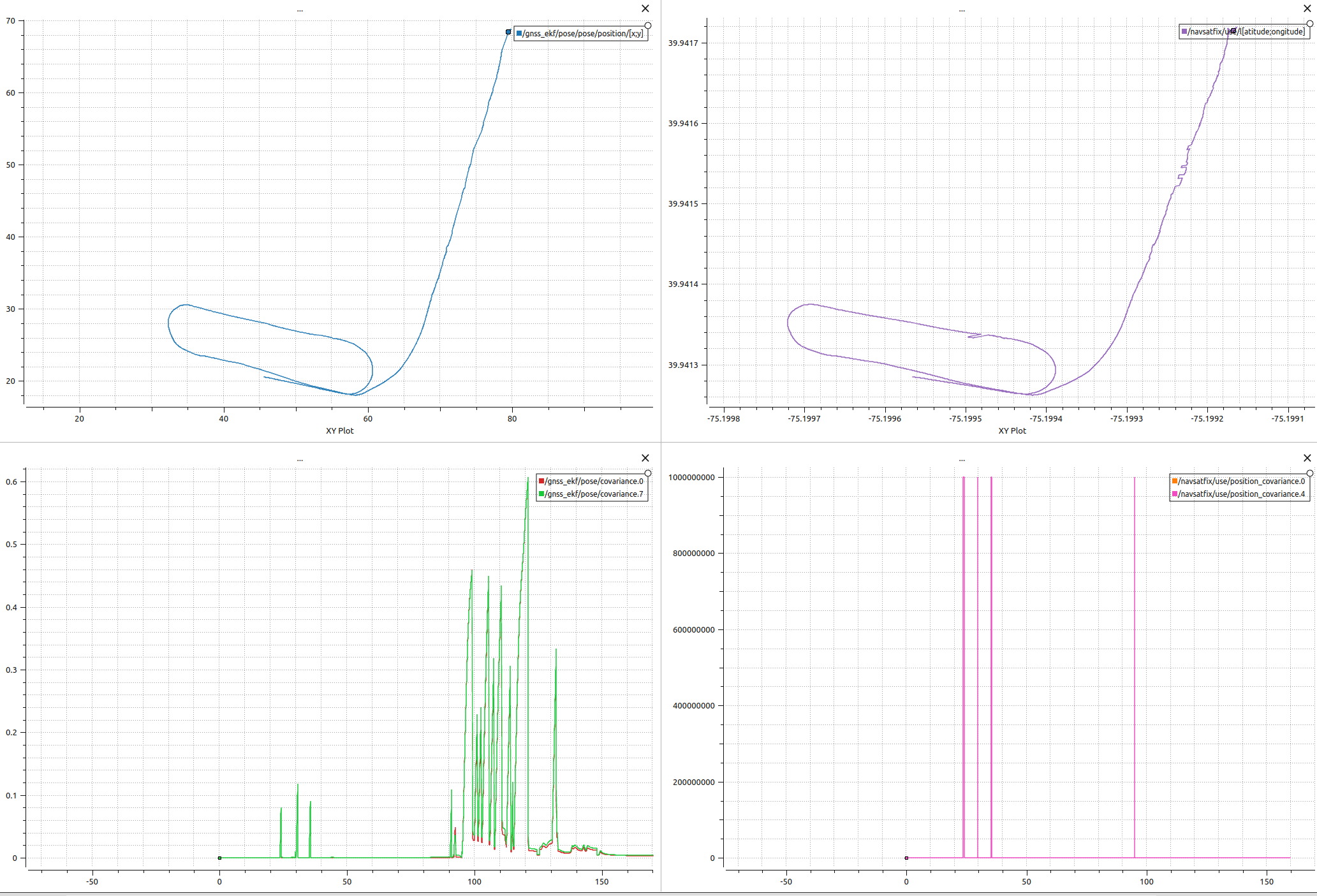

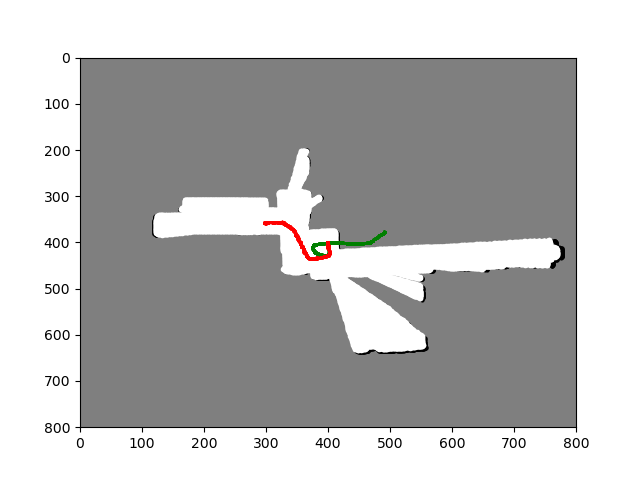
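The two pieces described above, linear interpolation of recorded waypoints and pure-pursuit steering, can be sketched as follows. This is an illustrative example rather than our race code; the function names, the `spacing` and `wheelbase` parameters, and the bicycle-model steering formula are assumptions made for the sketch.

```python
import math

def densify(waypoints, spacing):
    """Linearly interpolate so consecutive path points are at most `spacing` apart.

    waypoints: non-empty list of (x, y) tuples recorded while driving manually.
    """
    path = []
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        dist = math.hypot(x1 - x0, y1 - y0)
        steps = max(1, math.ceil(dist / spacing))
        for k in range(steps):
            t = k / steps
            path.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    path.append(waypoints[-1])
    return path

def pure_pursuit_steering(pose, target, wheelbase):
    """Steering angle (rad) that arcs a bicycle-model vehicle through `target`.

    pose: (x, y, yaw) of the vehicle; target: (x, y) lookahead point on the path.
    """
    x, y, yaw = pose
    dx, dy = target[0] - x, target[1] - y
    lookahead = math.hypot(dx, dy)
    alpha = math.atan2(dy, dx) - yaw   # bearing to target in the vehicle frame
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)
```

In use, the controller would pick the first densified point at least one lookahead distance ahead of the kart and pass it as `target` on every control cycle.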

The following is the final result of EKF fusion using GPS and IMU. The pipeline first filters out GPS jumps and then uses an EKF to fuse GPS positions with IMU measurements. The figure below shows the filtered results on the upper left and the fused covariance on the lower left. The raw GPS data and its covariance are shown on the top right and bottom right, respectively.

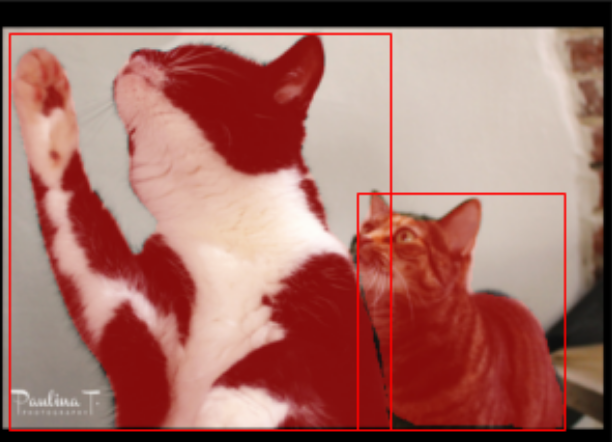
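One simple way to filter GPS jumps before fusion is a speed gate: drop any fix that would require the vehicle to move faster than a plausible maximum speed since the last accepted fix. The sketch below shows that idea only; it is an assumption made for illustration and not necessarily the filter used in our pipeline. The function name and the `max_speed` parameter are hypothetical.

```python
import math

def reject_gps_jumps(fixes, max_speed):
    """Keep only fixes reachable from the last accepted fix at <= max_speed.

    fixes: list of (t, x, y) tuples in a local metric frame, sorted by time.
    max_speed: hypothetical upper bound on vehicle speed in m/s.
    """
    kept = []
    for t, x, y in fixes:
        if kept:
            t0, x0, y0 = kept[-1]
            dt = t - t0
            # Skip duplicate or out-of-order timestamps and implausible jumps.
            if dt <= 0 or math.hypot(x - x0, y - y0) / dt > max_speed:
                continue
        kept.append((t, x, y))
    return kept
```

A gate like this trusts the first fix unconditionally, so a production version would also need a way to recover if that first fix is itself an outlier.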

As part of exploring alternate approaches for future competitions, I am currently working on fusing cone detections from both LIDAR and camera sensors. This ongoing project involves various stages, including calibration and the development of separate cone detection pipelines. We have also implemented an overlay of camera detections using YOLO-v7 to filter the point cloud data. Our ultimate objective is to utilize this robust and fused pipeline to perform Simultaneous Localization and Mapping (SLAM) using cones as landmarks. I invite you to have a look at the current results below.

Professional Experience

SkyMul (Atlanta) - Integration Lead

My role at SkyMul was to integrate hardware components and create an end-to-end software pipeline for waypoint navigation on a mobile platform. During my internship, I had the opportunity to work with hardware and learn how to use software drivers to integrate components into a complete system. I worked mainly on integrating three different hardware platforms with the robot. The aim was to test the accuracy of these sensor platforms and decide which was best suited to the robot’s task.

Mowito Robotics (Bangalore) - Robotics Engineer

My work focused mainly on feature development of the Maxl controller for client-specific applications of mobile robots (results of this work are shown below). I was also involved in porting the Maxl controller to ROS2 for benchmarking and in making the Maxl controller library independent of ROS.

Projects

Object detection and instance segmentation

Implemented YOLO, SOLO, and Faster-RCNN pipelines from scratch for object detection and instance segmentation tasks. Performed post-processing and analysed performance using the mAP metric. GitHub

Path planning approaches for a planar quadrotor

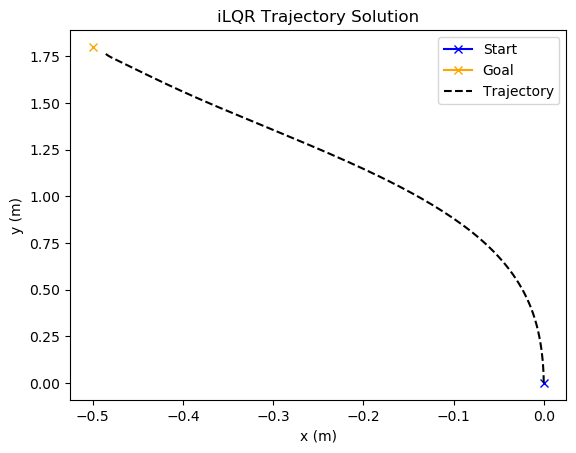

This project was a semester-long implementation of several methods for planar quadrotor control as part of MEAM 517 (Control and Optimization with learning in Robotics). These methods include MPC, iLQR, and LQR to follow a nominal trajectory, and minimum snap trajectory planning in the differentially flat space of the quadrotor. The results are shown below. GitHub

Two-View and Multi-View Stereo for 3D reconstruction

The aim of this project was to use two-view and multi-view images to form a 3D reconstruction of the object of interest. For multi-view stereo, the plane sweep algorithm was implemented. GitHub

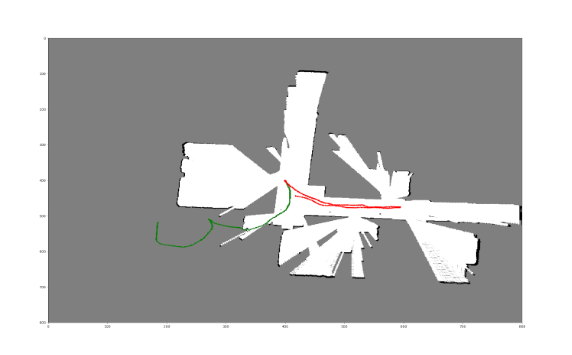

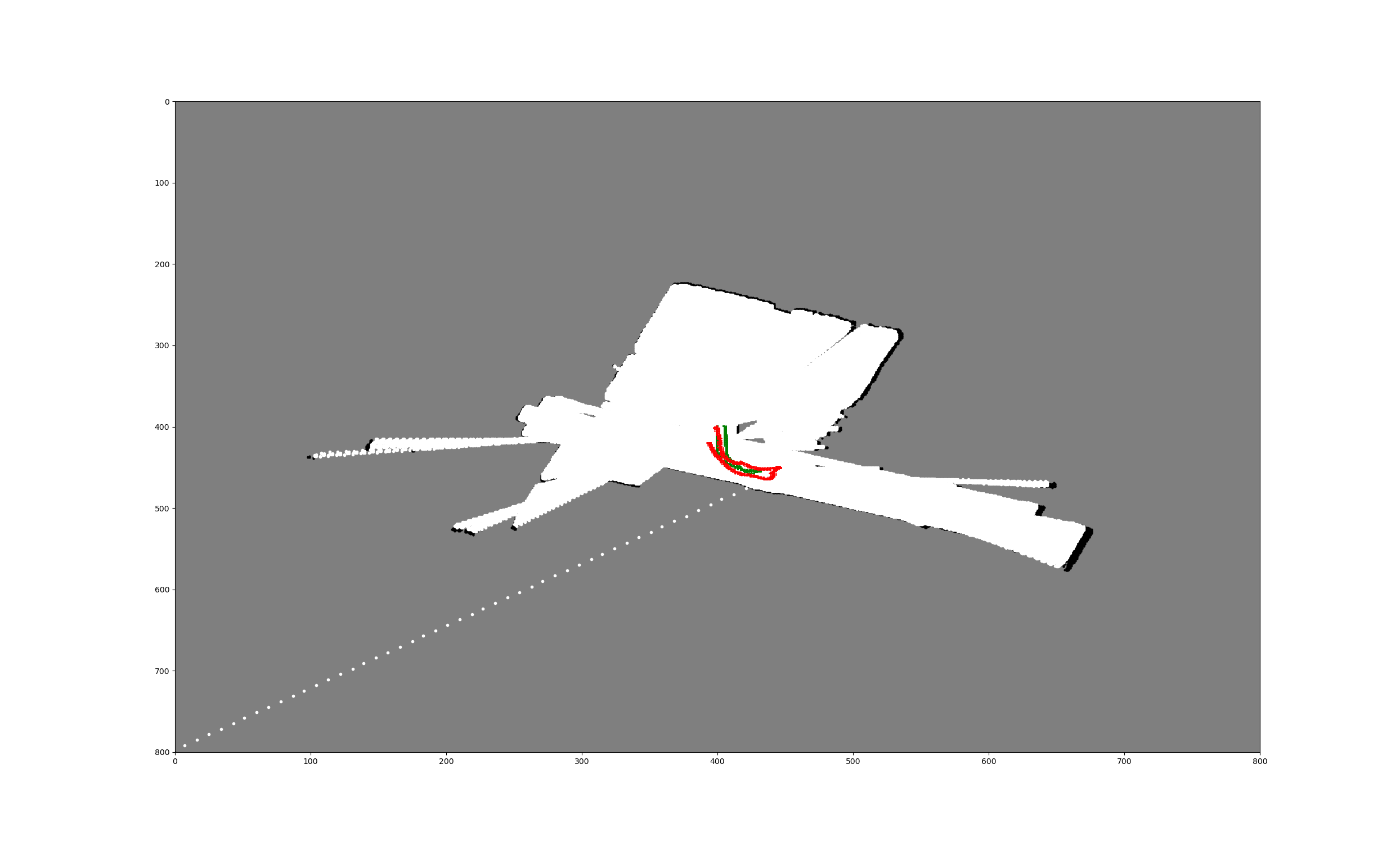

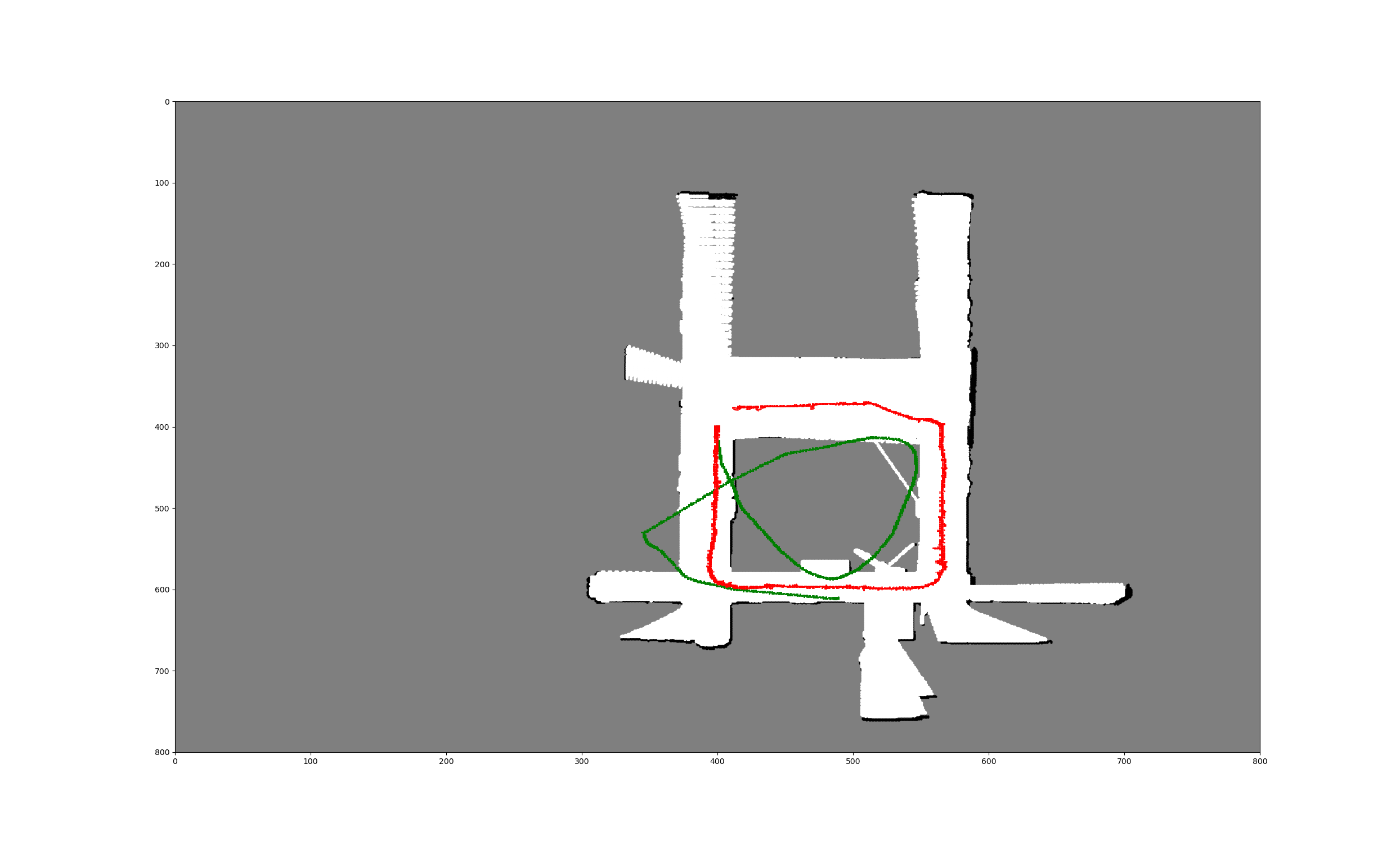

SLAM using Particle Filter for humanoid Robot

The aim of this project was to perform particle filter based SLAM using the IMU and LIDAR data from a THOR-OP Humanoid Robot. The available IMU data was filtered and used with the lidar data to perform SLAM. The lidar data is transformed into map coordinates by applying suitable transformations. Following the particle filter approach, the particle with the maximum correlation is chosen and the log odds of the map are updated. This scan-matching technique is used to update obstacles in real time on a grid map as well as to localize the robot in the world. GitHub
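The log-odds map update mentioned above can be sketched as below. This is a simplified illustration, not the project code: the increment values, the clamp limit, and the function names are assumptions, and the cells hit or traversed by each lidar ray are taken as given inputs.

```python
import math

def update_log_odds(grid, hit_cells, free_cells, l_occ=0.9, l_free=0.4, l_max=10.0):
    """Update a log-odds occupancy grid in place from one lidar scan.

    grid: list of lists of log-odds values (0.0 means unknown).
    hit_cells: (row, col) cells where a ray ended on an obstacle.
    free_cells: (row, col) cells a ray passed through.
    l_occ, l_free, l_max: hypothetical increments and clamp limit.
    """
    for r, c in hit_cells:
        grid[r][c] = min(l_max, grid[r][c] + l_occ)
    for r, c in free_cells:
        grid[r][c] = max(-l_max, grid[r][c] - l_free)
    return grid

def occupancy_probability(log_odds):
    """Convert a log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

Clamping the log odds keeps cells from becoming so confident that later scans can no longer change them, which matters when obstacles move.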

Autonomous Pick and Place Challenge

The aim of this project was to use the library features developed for the Franka Panda Arm during the semester to develop a strategy for picking up static and dynamic blocks and placing them on the reward table. The strategy aimed to maximize the score and minimize the time. The GitHub repository for the entire project can be found here.